The resource requirements that we have described are directly related to how data centres are designed and operated.

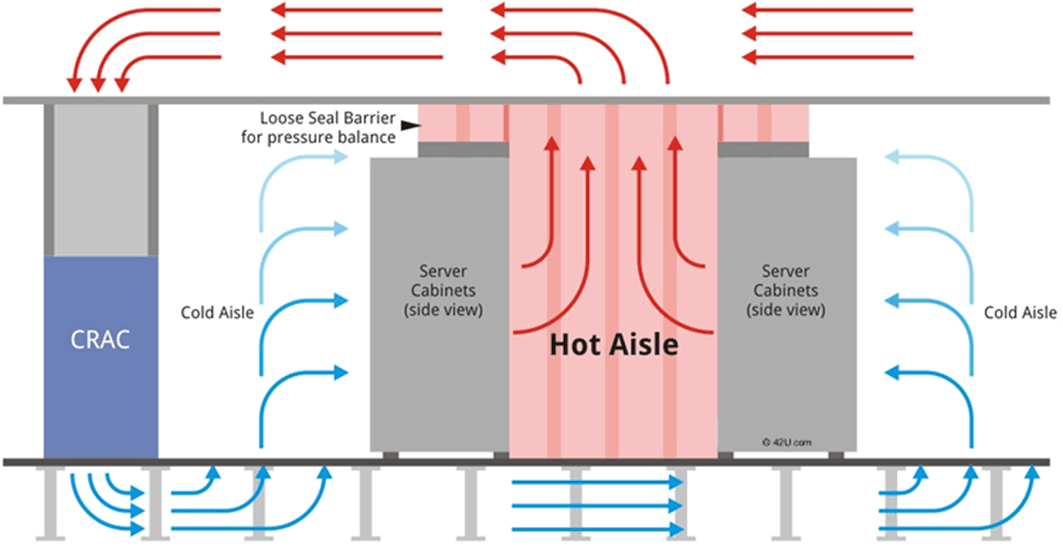

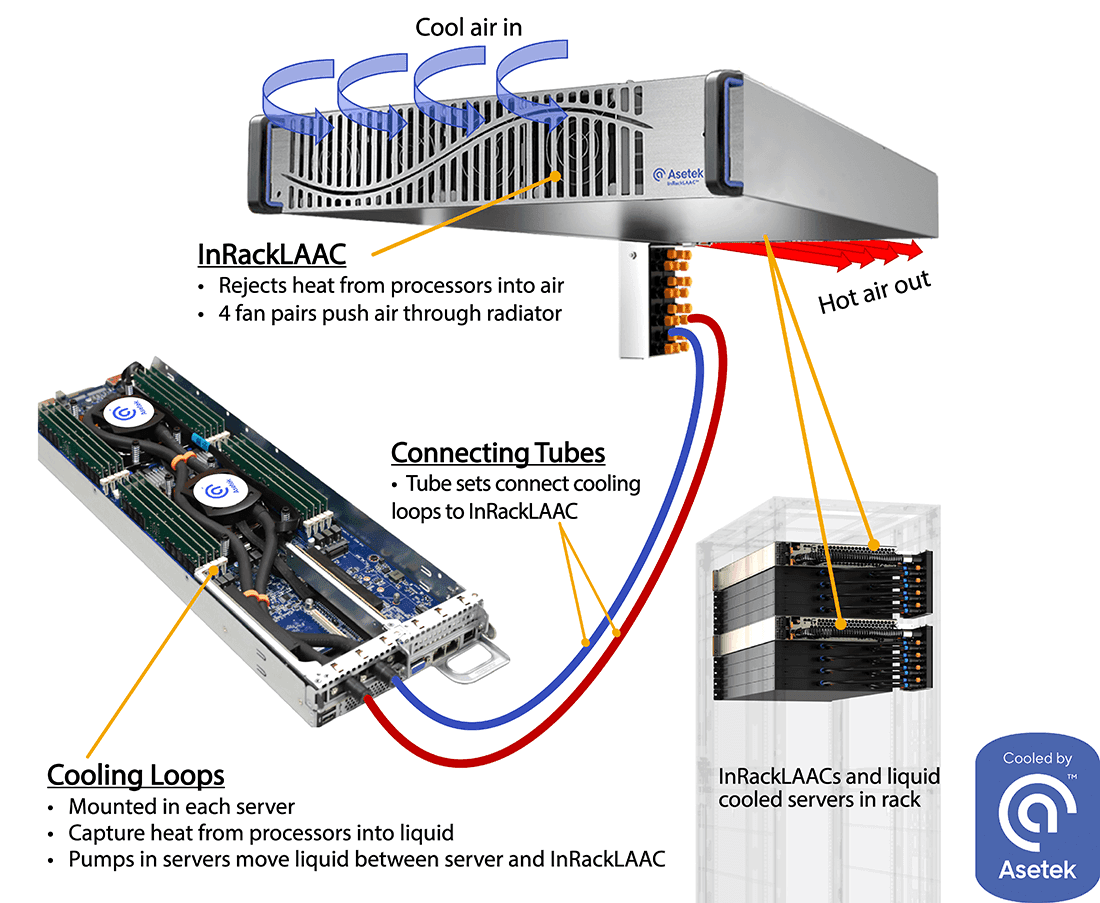

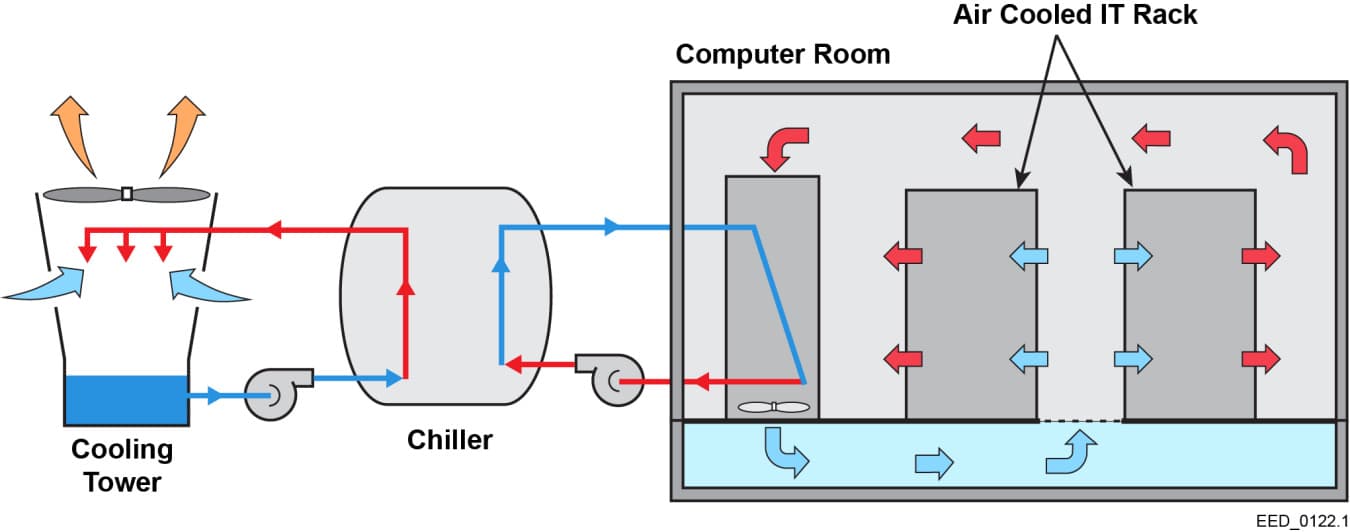

Data centres are dedicated complexes that host the servers and infrastructure that power our digital world - including AI-powered applications. Their operation requires significant power and advanced cooling systems to ensure optimal performance and protect key hardware from overheating.

How data centres work

Data centres are essential infrastructure for operating large language models (LLMs), such as GPT or LLaMA. These models require significant computing resources, which places high demands on the energy efficiency of data centres.

The standard metric for assessing data centre energy efficiency is PUE (Power Usage Effectiveness), which shows how much of the total power consumed is actually used by IT equipment (servers, storage, network devices, GPUs, etc.).

PUE is calculated as the ratio of the total power consumption of the data centre to the power consumption of the IT equipment:

PUE = Total data centre energy consumption / IT equipment energy consumption

A value of 1.0 means perfect efficiency - all energy goes directly to computing and nothing is spent on cooling, lighting, etc. In practice, however, this value is very difficult to achieve. For example, Google's data centres achieved an average PUE of 1.1 in 2022, with the best values being 1.07. However, the global average was around 1.57, indicating significant room for improvement.
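To make the ratio concrete, here is a minimal Python sketch of the PUE formula. The function names `pue` and `overhead_share` and the energy figures are illustrative assumptions, not measurements from any real facility. The second function uses the identity that the non-IT share of total energy equals 1 - 1/PUE, which follows directly from the definition.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Return Power Usage Effectiveness: total facility energy / IT energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    if total_facility_kwh < it_equipment_kwh:
        # The facility total includes the IT load, so it cannot be smaller.
        raise ValueError("total energy cannot be less than IT energy")
    return total_facility_kwh / it_equipment_kwh


def overhead_share(pue_value: float) -> float:
    """Return the fraction of total energy NOT used by IT equipment.

    (total - IT) / total = 1 - IT / total = 1 - 1 / PUE
    """
    if pue_value < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return 1.0 - 1.0 / pue_value


if __name__ == "__main__":
    # Hypothetical facility: 1,100 kWh drawn in total, 1,000 kWh by IT equipment.
    example = pue(total_facility_kwh=1100.0, it_equipment_kwh=1000.0)
    print(f"PUE = {example:.2f}")

    # Compare the overhead implied by the PUE values quoted in the text.
    for value in (1.07, 1.1, 1.57):
        print(f"PUE {value}: {overhead_share(value):.1%} of energy is overhead")
```

Read this way, a PUE of 1.1 corresponds to roughly 9% of total energy going to cooling, lighting and other overhead, while a PUE of 1.57 corresponds to roughly 36%.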

Key components

- Connectivity

- Provides connectivity between devices inside the data centre and to the outside world. It includes elements such as routers, switches, firewalls and application controllers. Fast and reliable data transfer is essential for effective LLM training and inference.

- Storage

- Used for data storage and backup. It includes hard drives, SSDs, tape drives, and data backup and recovery systems. Fast NVMe SSDs are often used to minimize latency.